December 2016

What drives decisions by autonomous vehicles in dire situations?

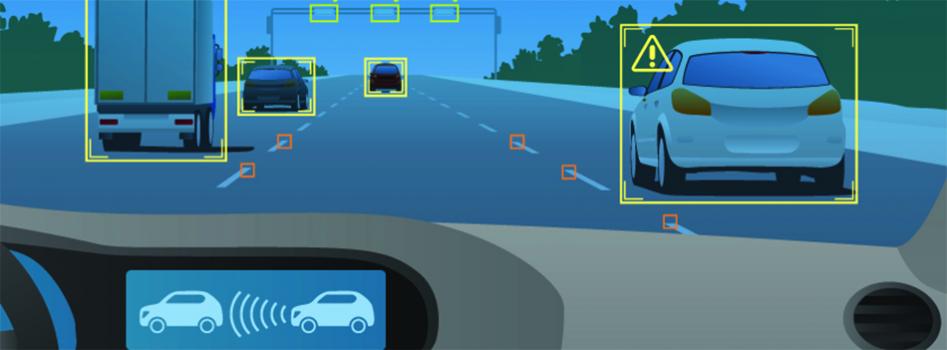

Although autonomous vehicles promise dramatic reductions in accident-related fatalities, injuries and damages, as well as significant gains in transportation efficiency and safety, consumers aren't as excited about them as the auto industry is. Research shows that people are uneasy about life-and-death driving decisions being made by algorithms rather than by humans. Who determines the ethics of those algorithms?

Bill Ford Jr., executive chairman of Ford Motor Co., said recently that these ethics must be derived from “deep and meaningful conversations” among the public, the auto industry, the government, universities and ethicists.

Azim Shariff, Assistant Professor of Psychology & Social Behavior at the University of California, Irvine, and his colleagues – Iyad Rahwan, Associate Professor of Media Arts & Sciences at the MIT Media Lab in Cambridge, Mass., and Jean-Francois Bonnefon, a Research Director at the Toulouse School of Economics in France – have created an online survey platform called the Moral Machine to help promote that discussion.

Launched in May, the platform has already drawn more than 2.5 million participants from over 160 countries.

Although self-driving cars are expected to make accidents less frequent, accidents will still happen. People taking the Moral Machine survey can share their opinions about which algorithmic decisions are most ethical for a vehicle to make.

Participants are presented with 13 scenarios, each of which results in at least one fatality and often several. Victims can be passengers, pedestrians or even pets. Humans are characterized by sex, age, fitness level and social status, such as physician or criminal. Participants are asked what they think the car should do in each case.

“We want to gauge where people’s moral priorities are in situations where autonomous vehicles have to weigh the risks of harming different individuals or animals,” Shariff explains. “Do people prefer entirely utilitarian algorithms – where the car prioritizes the greatest good for the greatest number of people? Or do we think it’s more ethical for a car to prioritize the lives of its passengers? Should young lives be valued over older lives?”

Results are tabulated to show each survey taker where he or she falls on a sliding scale from "does not matter" to "matters a lot" for a variety of preferences, including "saving more lives," "protecting passengers" and "avoiding intervention," as well as for gender, age, species, fitness and social value. The site also shows the average of all previous responses, so people can see how their ethical intuitions compare with those of other participants.

“At the end, participants have the option to help us better understand their judgments by choosing to answer additional questions about their personal trust of machines and willingness to buy a self-driving car,” Shariff says. “The research we’ve already published on the ethics of autonomous vehicles has been met with a lot of interest. The international reach of the Moral Machine allows us to vastly increase the breadth of this research and study a much wider array of societies that will be affected in a future dominated by self-driving cars.”

-- Pat Harriman, UCI Communications